Bias & discrimination

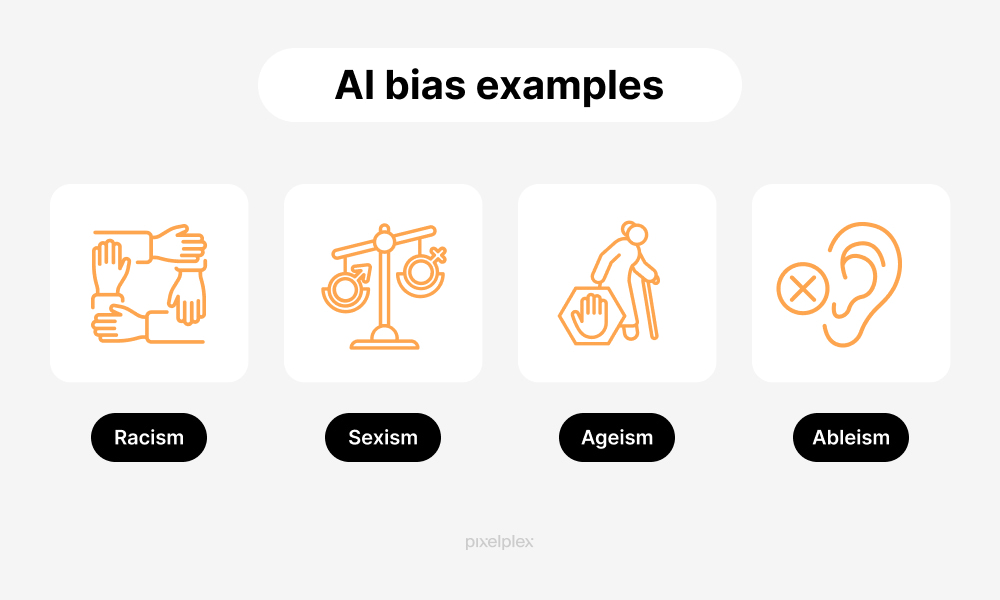

AI models tend to carry bias and discrimination against minorities, including racial and gender bias. This is largely caused by training data drawn from the internet, which contains a great deal of discriminatory language.

The complexities of AI and the dangers of a single narrative.

Imagine a world where artificial intelligence has replaced most human jobs. This scenario, often portrayed in science fiction, captures the public's attention and fuels a variety of fears about the future of AI. But a closer look shows that this is just one narrative, a single story. Chimamanda Ngozi Adichie's concept of 'The Danger of a Single Story' applies to the complex world of AI as well: AI has both advantages and disadvantages, and no single narrative captures them all.

AI’s journey began in the 1950s with the first concepts of 'thinking machines,' evolving through decades into today’s sophisticated algorithms that power everything from your smartphone’s voice assistant to autonomous vehicles. We'll look at different epochs in AI development: the early theoretical concepts, the AI winters, the renaissance fueled by big data and machine learning, and today's burgeoning applications in diverse fields.

While AI brings numerous benefits, such as autonomous cars and the automation of routine tasks, it also raises significant concerns. Privacy invasions, biased algorithms, and job displacement are just a few of the challenges. These issues are often sidelined by either excessive optimism or dystopian fears, a classic example of the danger of a single story. How we address these concerns, integrating multiple stories and perspectives, will largely shape AI’s role in our future society.

We need to admit that AI brings many conveniences to our daily lives, such as walking me through hard problems that I don't know how to solve and helping me schedule my time.

When AI models carry these biases and discriminatory patterns, they treat the people affected unfairly and create further social problems.

Although AI has brought us many benefits, it has also exposed critical issues of bias and discrimination within these systems. This is particularly evident in large language models (LLMs) such as GPT-4.

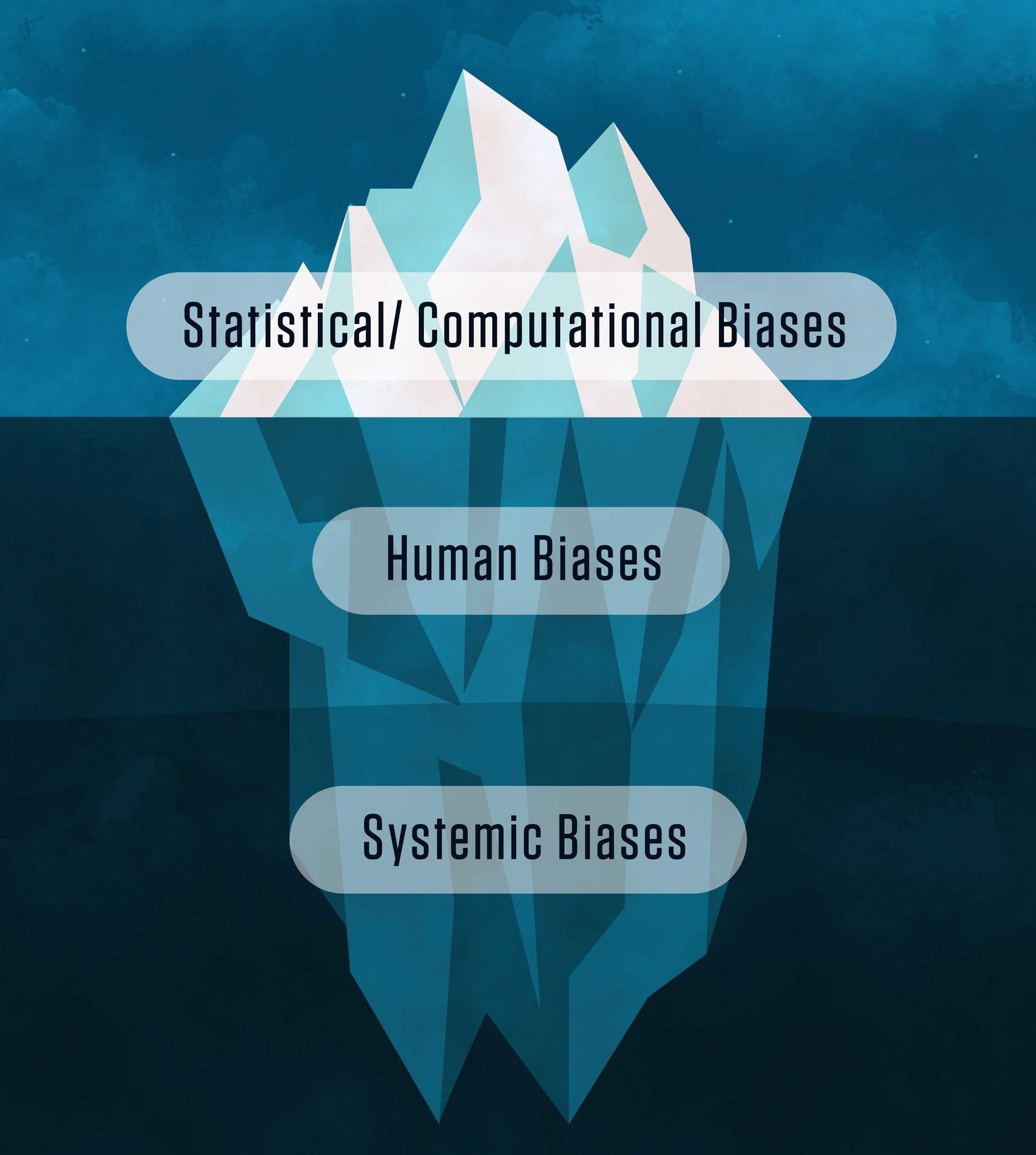

The biases in AI systems stem from various sources. Firstly, the data used to train these models often reflects existing social biases. If a dataset contains more positive associations with one gender or race over another, the AI model will learn these associations. Secondly, the algorithms themselves can introduce biases. Certain design choices in AI systems, such as how they weigh different pieces of data, can inadvertently favor certain groups over others.
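As a minimal sketch of the first point, assume a toy and entirely hypothetical dataset of (group, label) pairs in which one group co-occurs with positive labels more often than the other. The `learn_associations` helper is invented for illustration; it stands in for any model that estimates label frequencies from its training data, and it reproduces the skew in that data exactly.

```python
from collections import Counter, defaultdict

# Hypothetical toy data: (group, label) pairs. Not real measurements.
TRAINING_DATA = [
    ("group_a", "positive"), ("group_a", "positive"),
    ("group_a", "positive"), ("group_a", "negative"),
    ("group_b", "positive"), ("group_b", "negative"),
    ("group_b", "negative"), ("group_b", "negative"),
]


def learn_associations(examples):
    """Estimate P(label = positive | group) by simple counting."""
    counts = defaultdict(Counter)
    for group, label in examples:
        counts[group][label] += 1
    return {
        group: label_counts["positive"] / sum(label_counts.values())
        for group, label_counts in counts.items()
    }


learned = learn_associations(TRAINING_DATA)
for group, probability in sorted(learned.items()):
    print(f"{group}: P(positive) = {probability:.2f}")
```

Nothing in the helper mentions either group by name, yet the learned probabilities differ, because the imbalance comes entirely from the data it was given.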

The impact of biased AI is profound. In criminal justice, biased algorithms can lead to unfair sentencing and parole decisions. In hiring processes, AI systems might favor certain demographics over others, perpetuating workplace inequality. In healthcare, biased AI can result in unequal treatment recommendations for patients from different backgrounds.

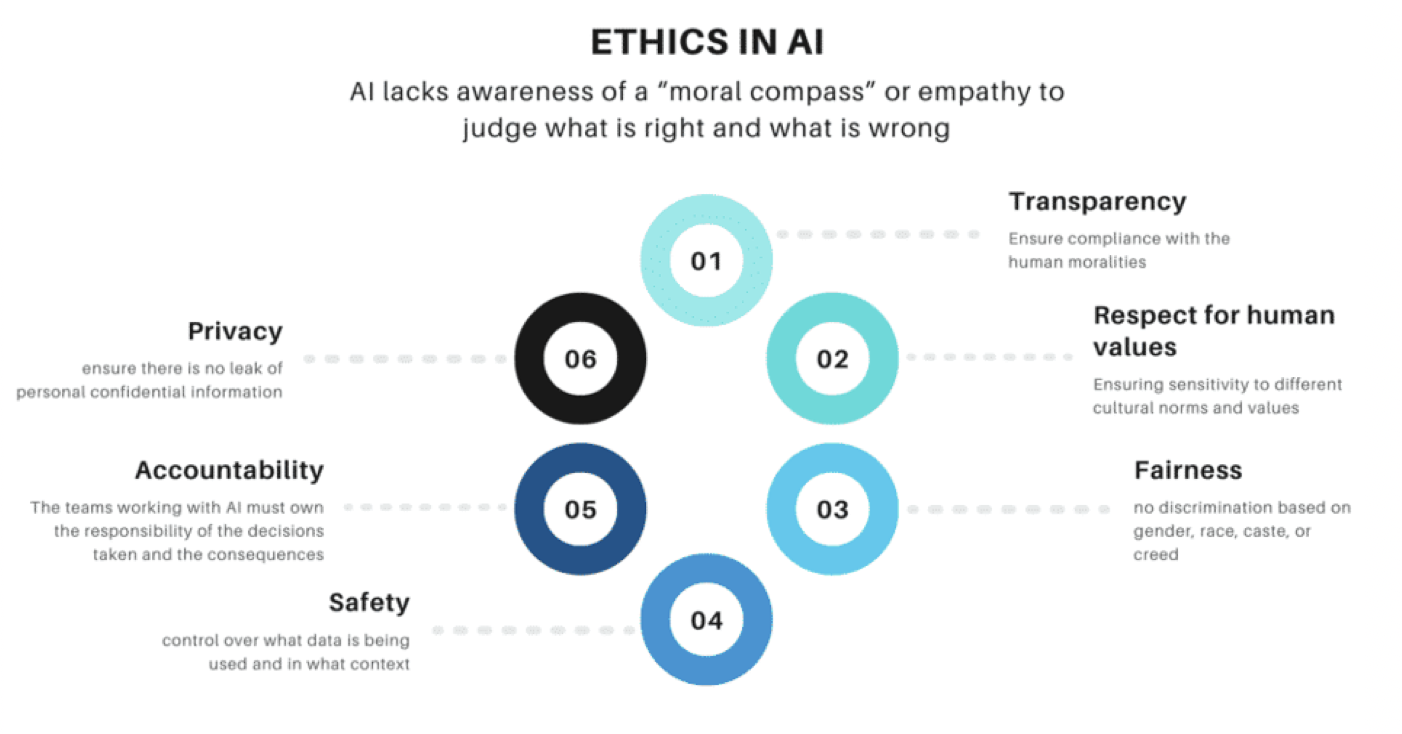

Addressing bias and discrimination in AI requires a multifaceted approach. It involves improving the diversity of training datasets, developing more transparent and accountable AI algorithms, and implementing robust testing and validation processes to detect and mitigate biases. Furthermore, involving ethicists, sociologists, and other experts in the AI development process can provide broader perspectives on fairness and equity.
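One simple form such testing could take is comparing how often a system selects people from each group. The sketch below is an assumption-laden illustration, not a complete audit: the data is hypothetical, the function names are invented, and the 0.8 cutoff is borrowed from the "four-fifths" rule of thumb used in US employment contexts rather than from the text above.

```python
# Hypothetical audit data: (group, was_selected). Not real hiring records.
DECISIONS = (
    [("group_a", True)] * 5 + [("group_a", False)] * 5
    + [("group_b", True)] * 2 + [("group_b", False)] * 8
)

# Assumed rule-of-thumb cutoff; choose a threshold suited to the context.
THRESHOLD = 0.8


def selection_rates(decisions):
    """Return the fraction of positive decisions for each group."""
    totals, selected = {}, {}
    for group, was_selected in decisions:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so no disparity
    return min(rates.values()) / highest


rates = selection_rates(DECISIONS)
ratio = disparate_impact_ratio(rates)
verdict = "flag for review" if ratio < THRESHOLD else "within threshold"
print(f"rates={rates} ratio={ratio:.2f} -> {verdict}")
```

A low ratio does not prove discrimination on its own, but it marks a result that deserves closer human review.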

In conclusion, while AI and LLMs like GPT-4 offer tremendous potential, it is crucial to address their biases and discriminatory tendencies to ensure they contribute positively to society. By acknowledging and tackling these issues, we can harness the power of AI while promoting fairness and equality.

Bias in AI systems can have a serious negative impact on our world, influencing decisions and perpetuating inequalities across various sectors. A study from the MIT Media Lab found that commercial facial-analysis systems exhibit higher error rates for women and people of color. For instance, the systems misclassified darker-skinned females at rates of up to 34.7%, compared with an error rate of at most 0.8% for lighter-skinned males (Buolamwini & Gebru, 2018). This discrepancy highlights how bias in AI can lead to discriminatory practices and outcomes.
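To make the comparison concrete, the sketch below shows one way per-group error rates might be computed, and then works out the gap between the two figures quoted above. The evaluation records and the `error_rates_by_group` helper are hypothetical; only the 34.7% and 0.8% values come from the cited study.

```python
# Hypothetical evaluation records: (group, predicted_label, true_label).
RECORDS = [
    ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_a", 0, 0), ("group_a", 1, 1),
    ("group_b", 0, 1), ("group_b", 1, 1),
    ("group_b", 0, 0), ("group_b", 0, 1),
]


def error_rates_by_group(records):
    """Return the fraction of wrong predictions for each group."""
    totals, errors = {}, {}
    for group, predicted, actual in records:
        totals[group] = totals.get(group, 0) + 1
        errors[group] = errors.get(group, 0) + int(predicted != actual)
    return {group: errors[group] / totals[group] for group in totals}


toy_rates = error_rates_by_group(RECORDS)
print("toy error rates:", toy_rates)

# Figures quoted in the text (Buolamwini & Gebru, 2018), in percent.
darker_female_pct = 34.7
lighter_male_pct = 0.8

gap_points = darker_female_pct - lighter_male_pct
ratio = darker_female_pct / lighter_male_pct
print(f"gap: {gap_points:.1f} percentage points, ratio: {ratio:.1f}x")
```

Reporting error rates separately for each group matters because a single overall accuracy figure can hide a gap of this size.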

The root of this bias lies in the data used to train AI models. When training data contains biased examples, the AI will reflect and even amplify these biases. This becomes particularly problematic in critical areas such as criminal justice, hiring, and healthcare, where biased AI can lead to unfair treatment and deepen societal inequalities. For example, biased hiring algorithms may disadvantage minority applicants.

In conclusion, the presence of bias in AI systems not only reduces the reliability of these systems but also poses serious ethical and social challenges.